During CS509: Computer Vision, a class I took while going for my MS in Robotics Engineering, we made a brief detour into Neural Radiance Fields and tried to set up our own. We utilized the paper, “NeRF: Representing Scenes as

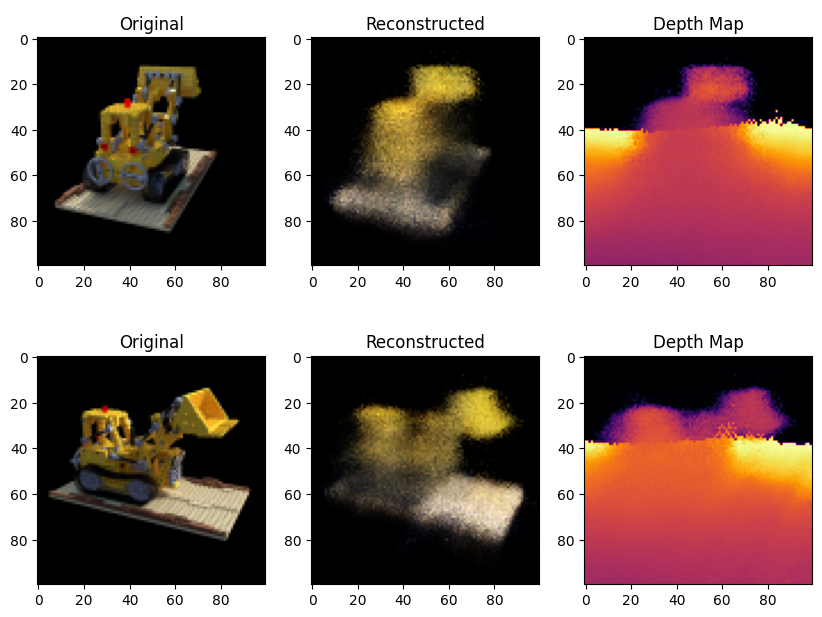

Neural Radiance Fields for View Synthesis” by B. Mildenhall, P. P. Srinivasan, M. Tancik et al. from the proceedings of ECCV 20 and example code entitled, ‘tiny nerf’ with a sample dataset from UCSD. With the sample dataset the results were impressive:

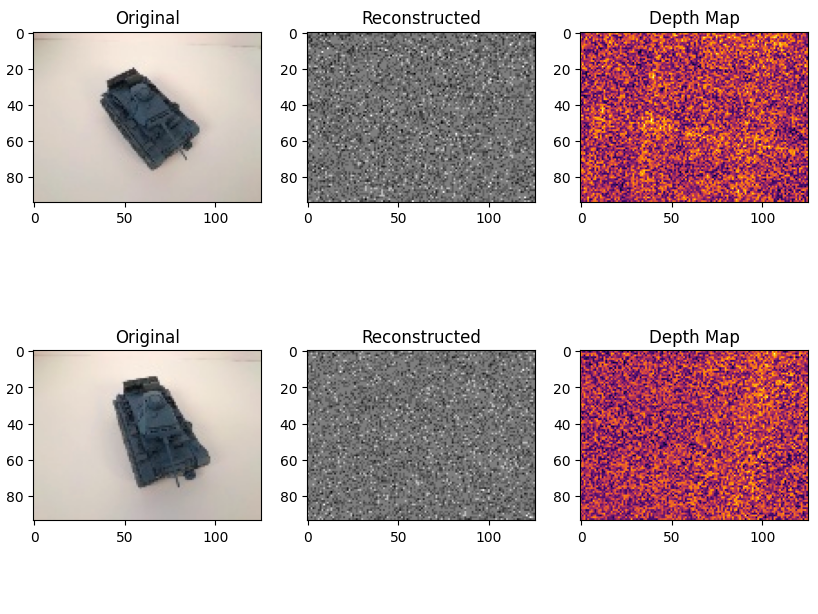
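To make the method a little more concrete, here is a minimal NumPy sketch of two core pieces the paper describes: a positional encoding of input coordinates and the alpha-compositing of density and color samples along a camera ray. This is not the tiny nerf code. The function names, the default of six frequencies, and the 1e10 stand-in for the last sample's interval are illustrative assumptions. The paper's encoding also multiplies each frequency by π; this sketch omits that factor, as some implementations do.

```python
import numpy as np

def positional_encoding(x, num_freqs=6):
    """Map coordinates x of shape (..., D) to [x, sin(2^k x), cos(2^k x)] for k < num_freqs."""
    feats = [x]
    for k in range(num_freqs):
        feats.append(np.sin(2.0**k * x))
        feats.append(np.cos(2.0**k * x))
    return np.concatenate(feats, axis=-1)

def composite(sigma, rgb, z_vals):
    """Alpha-composite samples along each ray.

    sigma:  (..., N) densities predicted at each sample
    rgb:    (..., N, 3) colors predicted at each sample
    z_vals: (..., N) sample depths along the ray, increasing
    Returns the rendered color, expected depth, and accumulated opacity per ray.
    """
    # Distance between adjacent samples; the last sample gets a very large interval.
    deltas = np.diff(z_vals, axis=-1)
    deltas = np.concatenate([deltas, np.full_like(z_vals[..., :1], 1e10)], axis=-1)
    # Opacity of each segment (negative densities clamped to zero).
    alpha = 1.0 - np.exp(-np.maximum(sigma, 0.0) * deltas)
    # Transmittance: product of (1 - alpha) over all earlier samples (exclusive cumprod).
    ones = np.ones_like(alpha[..., :1])
    trans = np.cumprod(np.concatenate([ones, 1.0 - alpha], axis=-1), axis=-1)[..., :-1]
    weights = alpha * trans
    rgb_map = np.sum(weights[..., None] * rgb, axis=-2)
    depth_map = np.sum(weights * z_vals, axis=-1)
    acc_map = np.sum(weights, axis=-1)
    return rgb_map, depth_map, acc_map
```

For a batch of rays, `sigma` and `z_vals` would have shape `(num_rays, N)` and `rgb` shape `(num_rays, N, 3)`. In a full NeRF, the encoded sample positions feed an MLP that predicts `sigma` and `rgb`, and the loss compares `rgb_map` against the true pixel colors.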

I attempted to build my own dataset from photos of a model tank I had lying around, but unfortunately did not achieve the same results. The loss stayed high, and training got stuck in a local minimum that it could not escape.

Looking back at it now, I believe most of the issues came from the dataset I made. The likely culprits are the pose data I extracted from the image set and the imperfect background behind the model tank, in contrast to the synthetic building-block bulldozer data, whose background is uniformly black.
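If that diagnosis is right, two cheap checks could have been run on the dataset before training. The sketch below is hypothetical and not part of tiny nerf: `black_out_background` assumes a per-image foreground mask is already available (for example from manual segmentation), and `find_malformed_poses` assumes poses are stored as 4x4 camera-to-world matrices. Both function names are illustrative.

```python
import numpy as np

def black_out_background(images, masks):
    """Zero out every pixel outside a foreground mask.

    images: (N, H, W, 3) array of floats in [0, 1].
    masks:  (N, H, W) boolean array, True where the object is.
    """
    images = np.asarray(images, dtype=np.float32)
    masks = np.asarray(masks, dtype=bool)
    if images.shape[:3] != masks.shape:
        raise ValueError("masks must have shape (N, H, W) matching the images")
    # Broadcasting the mask over the color channel leaves object pixels unchanged
    # and sets background pixels to 0 (black).
    return images * masks[..., None]

def find_malformed_poses(poses, atol=1e-3):
    """Return indices of 4x4 camera-to-world poses that are not rigid transforms.

    Checks that the upper-left 3x3 block is orthonormal with determinant +1 and
    that the bottom row is [0, 0, 0, 1]. A pose can pass and still be inaccurate;
    this only catches matrices that are structurally wrong.
    """
    poses = np.asarray(poses, dtype=np.float64)
    bad = []
    for i, pose in enumerate(poses):
        rot = pose[:3, :3]
        is_orthonormal = np.allclose(rot.T @ rot, np.eye(3), atol=atol)
        is_proper = np.isclose(np.linalg.det(rot), 1.0, atol=atol)
        has_affine_row = np.allclose(pose[3], [0.0, 0.0, 0.0, 1.0], atol=atol)
        if not (is_orthonormal and is_proper and has_affine_row):
            bad.append(i)
    return bad
```

Masking only makes the background match the synthetic data's convention. Poses that are structurally valid but simply inaccurate would pass the second check and still need a better pose estimate.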